By: Catherine Clifford

Brandon Bell | Getty Images News | Getty Images

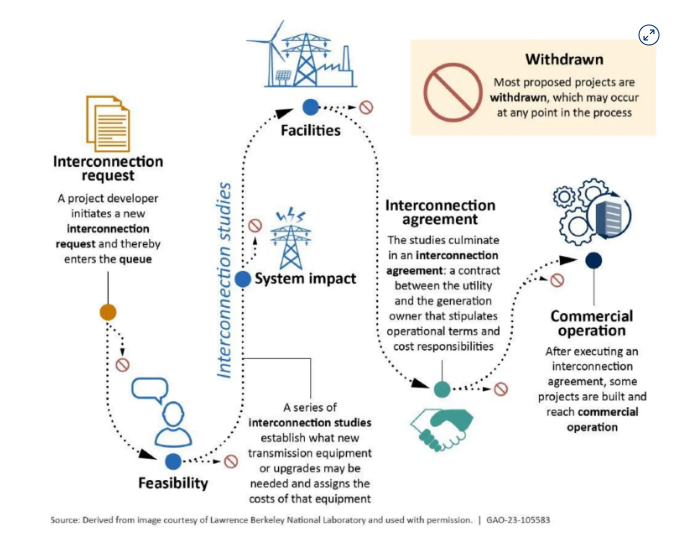

Building new transmission lines in the United States is like herding cats. Unless that process can be fundamentally improved, the nation will have a hard time meeting its climate goals.

The transmission system in the U.S. is old, doesn’t go where an energy grid powered by clean energy sources needs to go, and isn’t being built fast enough to meet projected demand increases.

Building new transmission lines in the U.S. takes so long — if they are built at all — that electrical transmission has become a roadblock for deploying clean energy.

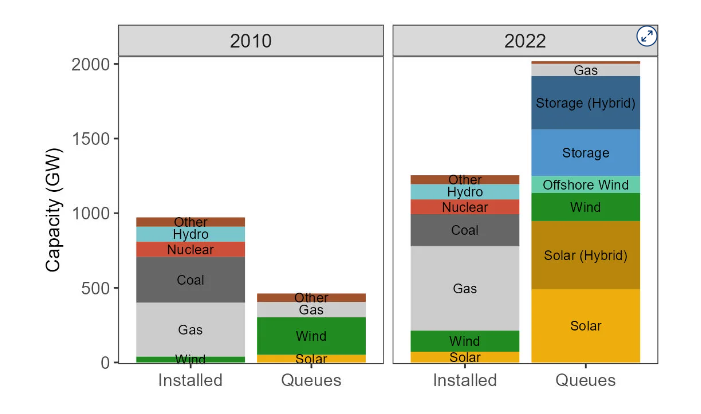

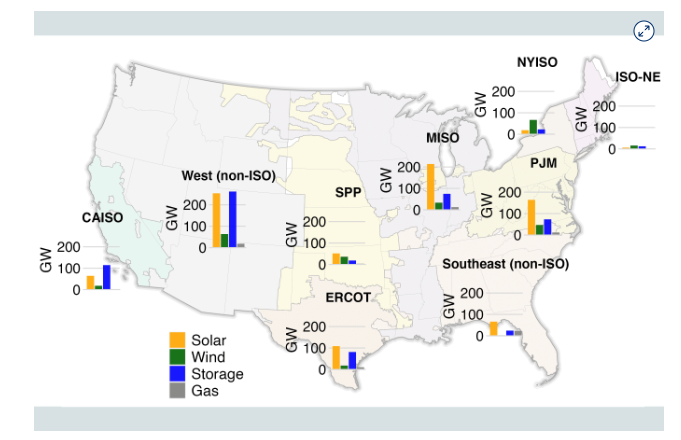

“Right now, over 1,000 gigawatts worth of potential clean energy projects are waiting for approval — about the current size of the entire U.S. grid — and the primary reason for the bottleneck is the lack of transmission,” Bill Gates wrote in a recent blog post about transmission lines.

The stakes are high.

Herding cats with competing interests

Building new transmission lines requires countless stakeholders to come together and hash out a compromise about where a line will run and who will pay for it.

There are 3,150 utility companies in the country, the U.S. Energy Information Administration told CNBC. For a transmission line to be built, the affected utilities, their respective regulators, and the landowners who will host the line all have to agree on where it will go and how to pay for it, each according to its own rules.

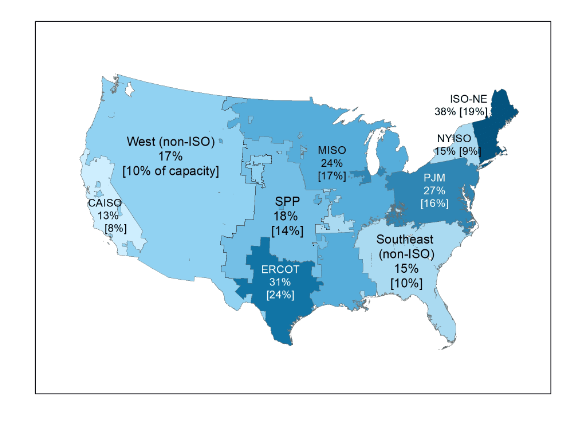

Aubrey Johnson, a vice president of system planning for the Midcontinent Independent System Operator (MISO), one of seven regional planning agencies in the U.S., compared his work to making a patchwork quilt from pieces of cloth.

“We are patching and connecting all these different pieces, all of these different utilities, all of these different load-serving entities, and really trying to look at what works best for the greatest good and trying to figure out how to resolve the most issues for the most amount of people,” Johnson told CNBC.

What’s more, the parties at the negotiating table can have competing interests. For example, an environmental group is likely to disagree with stakeholders who advocate for more power generation from a fossil-fuel-based source. And a transmission-first or transmission-only company involved is going to benefit more than a company whose main business is power generation, potentially putting the parties at odds with each other.

The system really flounders when a line would span a long distance, running across multiple states.

States “look at each other and say: ‘Well, you pay for it. No, you pay for it.’ So, that’s kind of where we get stuck most of the time,” Rob Gramlich, the founder of transmission policy group Grid Strategies, told CNBC.

“The industry grew up as hundreds of utilities serving small geographic areas,” Gramlich told CNBC. “The regulatory structure was not set up for lines that cross 10 or more utility service territories. It’s like we have municipal governments trying to fund an interstate highway.”

This type of headache and bureaucratic consternation often prevents utilities or other energy organizations from even proposing new lines.

“More often than not, there’s just not anybody proposing the line. And nobody planned it. Because energy companies know that there’s not a functioning way really to recover the costs,” Gramlich told CNBC.

Who benefits, who pays?

Energy companies that build new transmission lines need to get a return on their investment, explains James McCalley, an electrical engineering professor at Iowa State University. “They have got to get paid for what they just did, in some way, otherwise it doesn’t make sense for them to do it.”

Ultimately, an energy organization — a utility, cooperative, or transmission-only company — will pass the cost of a new transmission line on to the electricity customers who benefit.

“One principle that has been imposed on most of the cost allocation mechanisms for transmission has been, to the extent that we can identify beneficiaries, beneficiaries pay,” McCalley said. “Someone that benefits from a more frequent transmission line will pay more than someone who benefits less from a transmission line.”
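
To make the "beneficiaries pay" principle concrete, here is a minimal sketch of a proportional split. It is only an illustration: the function name `allocate_cost`, the three utilities and all dollar figures are hypothetical, and real cost-allocation rules differ by region and are considerably more involved.

```python
def allocate_cost(total_cost, benefits):
    """Split total_cost across parties in proportion to each party's estimated benefit."""
    total_benefit = sum(benefits.values())
    if total_benefit <= 0:
        raise ValueError("total estimated benefit must be positive")
    return {name: total_cost * b / total_benefit for name, b in benefits.items()}


# Hypothetical estimated benefits, in millions of dollars (not from the article).
benefits = {"utility_a": 600.0, "utility_b": 300.0, "utility_c": 100.0}

# Hypothetical line cost of $500 million, split by share of benefit.
shares = allocate_cost(500.0, benefits)

for name, share in shares.items():
    print(f"{name}: ${share:.0f} million")
```

In this made-up case, the utility expected to capture 60% of the benefit carries 60% of the cost. The hard part Gramlich and McCalley describe is agreeing on the benefit estimates in the first place, not the arithmetic.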

But the mechanisms for recovering those costs vary by region and by the relative size of the transmission line.

Regional transmission organizations, like MISO, can oversee the process in certain cases but often get bogged down in internal debates. “They have oddly shaped footprints and they have trouble reaching decisions internally over who should pay and who benefits,” said Gramlich.

The longer the line, the more problematic the planning becomes. “Sometimes it’s three, five, 10 or more utility territories that are crossed by needed long-distance high-capacity lines. We don’t have a well-functioning system to determine who benefits and assign costs,” Gramlich told CNBC.

Johnson from MISO says there’s been some incremental improvement in getting new lines approved. The regional organization has approved a $10.3 billion plan to build 18 new transmission projects. Those projects should take seven to nine years instead of the 10 to 12 that have historically been required, Johnson told CNBC.

“Everybody’s becoming more cognizant of permitting and the impact of permitting and how to do that and more efficiently,” he said.

There’s also been some incremental federal action on transmission lines. There was about $5 billion for transmission-line construction in the Inflation Reduction Act (IRA), but that’s not nearly enough, said Gramlich, who called that sum “kind of peanuts.”

The U.S. Department of Energy has a “Building a Better Grid” initiative that was included in President Joe Biden’s Bipartisan Infrastructure Law and is intended to promote collaboration and investment in the nation’s grid.

In April, the Federal Energy Regulatory Commission issued a notice of proposed rulemaking, docketed as RM21-17, which aims to address transmission-planning and cost-allocation problems. The rule, if it is adopted, is “potentially very strong,” Gramlich told CNBC, because it would force every transmission-owning utility to engage in regional planning. That holds only if there aren’t too many loopholes that utilities could use to undermine the spirit of the rule.

What success looks like

Gramlich does point to a couple of transmission success stories: The Ten West Link, a new 500-kilovolt high-voltage transmission line that will connect Southern California with solar-rich central Arizona, and the $10.3 billion Long Range Transmission Planning project that involves 18 projects running throughout the MISO Midwestern region.

“Those are, unfortunately, more the exception than the rule, but they are good examples of what we need to do everywhere,” Gramlich told CNBC.

In Minnesota, the nonprofit electricity cooperative Great River Energy is charged with making sure 1.3 million people have reliable access to energy now and in the future, according to vice president and chief transmission officer Priti Patel.

“We know that there’s an energy transition happening in Minnesota,” Patel told CNBC. In the last five years, two of the region’s largest coal plants have been sold or retired and the region is getting more of its energy from wind than ever before, Patel said.

Great River Energy serves some of the poorest counties in the state, so keeping energy costs low is a primary objective.

“For our members, their north star is reliability and affordability,” Patel told CNBC.

Great River Energy and Minnesota Power are in the early stages of building a 150-mile, 345-kilovolt transmission line from northern to central Minnesota. It’s called the Northland Reliability Project and will cost an estimated $970 million.

It’s one of the segments of the $10.3 billion investment that MISO approved in July, all of which are slated to be in service before 2030. Getting to that plan involved more than 200 meetings, according to MISO.

The overall investment is expected to yield benefits worth at least 2.6 and as much as 3.8 times its cost, or a delivered value of between $23 billion and $52 billion. Those benefits are calculated over a 20-to-40-year time period and take into account a number of inputs, including avoided capital cost allocations, fuel savings, decarbonization and risk reduction.

The cost will eventually be borne by energy users living in the MISO Midwest subregion, based on their usage and their utility’s retail rate arrangement with its state regulator. MISO estimates that consumers in its footprint will pay an average of just over $2 per megawatt-hour of energy delivered for 20 years.
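
For a rough sense of scale, the per-megawatt-hour figure can be turned into a yearly amount. This is a back-of-the-envelope sketch, not MISO's method: the household usage of 10 megawatt-hours a year is a hypothetical round number, and the rate is rounded to a flat $2 even though MISO's estimate is "just over" that.

```python
# MISO's estimate is "just over $2" per megawatt-hour; rounded to 2.0 here as an assumption.
RATE_USD_PER_MWH = 2.0
YEARS = 20  # duration cited by MISO


def annual_cost(annual_usage_mwh, rate=RATE_USD_PER_MWH):
    """Yearly transmission charge for a customer using annual_usage_mwh of electricity."""
    return annual_usage_mwh * rate


household_mwh = 10.0  # hypothetical household usage per year, not from the article

yearly = annual_cost(household_mwh)
total = yearly * YEARS

print(f"About ${yearly:.2f} per year, or ${total:.2f} over {YEARS} years")
```

Under these assumptions the charge comes to about $20 a year. Actual bills would depend on each utility's retail rate arrangement with its state regulator.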

But there is still a long process ahead. Once a project is approved by the regional planning authority — in this case MISO — and the two endpoints for the transmission project are decided, then Great River Energy and Minnesota Power are responsible for obtaining all of the land use permits necessary to build the line.

“MISO is not going to be able to know for certain what Minnesota communities are going to want or not want,” Patel told CNBC. “And that gives the electric cooperative the opportunity to have some flexibility in the route between those two endpoints.”

For Great River Energy and Minnesota Power, a critical component of engaging with the local community is hosting open houses where members of the public who live along the proposed route meet with project leaders to ask questions.

For this project, the utilities specifically planned the route of the transmission line to run along previously existing corridors as much as possible to minimize landowner disputes. But it’s always a delicate subject.

“Going through communities with transmission, landowner property is something that is very sensitive,” Patel told CNBC. “We want to make sure we understand what the challenges may be, and that we have direct one-on-one communications so that we can avert any problems in the future.”

At times, landowners give an absolute “no.” At other times, money talks: the Great River Energy cooperative can pay a landowner whose property the line crosses a one-time “easement payment,” which varies based on the land involved.

“A lot of times, we’re able to successfully — at least in the past — successfully get through landowner property,” Patel said. And that’s due to the work of the Great River Energy employees in the permitting, siting and land rights department.

“We have individuals that are very familiar with our service territory, with our communities, with local governmental units, and state governmental units and agencies and work collaboratively to solve problems when we have to site our infrastructure.”

Engaging with all members of the community is a necessary part of any successful transmission line build-out, Patel and Johnson stressed.

At the end of January, MISO held a three-hour workshop to kick off the planning for its next tranche of transmission investments.

“There were 377 people in the workshop for the better part of three hours,” MISO’s Johnson told CNBC. Environmental groups, industry groups, and government representatives from all levels showed up and MISO energy planners worked to try to balance competing demands.

“And it’s our challenge to hear all of their voices, and to ultimately try to figure out how to make it all come together,” Johnson said.